HTTP 429 Too Many Requests: Learn to Manage Request Limits


Understanding HTTP 429 Too Many Requests

The HTTP 429 Too Many Requests status code signals that a client has exceeded the number of requests a server's API allows within a given time window. This safeguard is vital for preventing abuse of server resources and keeping the service available.

HTTP 429 was introduced in 2012 by RFC 6585, more than a decade after HTTP/1.1 was published as RFC 2616 (1999). Surprisingly, RFC 2616 didn't include a status code specifically for rate-limited clients.

Common Scenarios That Trigger HTTP 429

HTTP 429 errors can be triggered in various situations, such as automated scripts, bots, or even legitimate users making too many requests within a short period. If you've encountered an HTTP 429 error, review the scenarios below to see whether yours matches:

- Automated scripts and bots: These are often programmed to send many requests to a server, whether for data scraping, DDoS (Distributed Denial of Service) attacks, or attempts to access restricted resources without authorization.

- Heavy traffic from legitimate users: If an API becomes popular and receives a high volume of requests, even legitimate traffic can strain the server and degrade its performance.

In both cases, API rate limiting is essential to maintain the integrity of the API and to protect it against abuse and degraded performance. A 429 error is not a bug; it is the API protecting itself from abuse so it can keep serving other requests.

Avoiding HTTP 429 Too Many Requests Errors

Oftentimes, an HTTP 429 error is self-inflicted, caused by a bug or programming mistake (for example, making API calls inside a loop, or inside a React useEffect that re-runs more often than intended). Implementing the following best practices can help you avoid HTTP 429 errors:

Identify the API's Rate Limits

Most public APIs document their rate limits, and many set different limits depending on which plan you subscribe to. Look through the API's Terms of Service and documentation to find the rate limit for the API you are calling. This establishes an upper bound on the number of requests you can send from the client.
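Once you know the documented limit, you can turn it into a client-side upper bound. As a minimal sketch, the helper below spaces out sequential calls (such as the accidental loop described earlier) so they never exceed requestsAllowed requests per windowMs. The names minIntervalMs and pacedMap and the limit values are hypothetical, and the sketch assumes a single caller; it does not coordinate several processes that share one limit.

```javascript
// Minimal sketch: pace sequential API calls so they stay under a documented limit.
// Assumption: the API allows `requestsAllowed` requests per `windowMs` for this
// single caller. Names and values are illustrative, not from any real API.

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Smallest gap between requests that keeps a steady stream under the limit.
function minIntervalMs(requestsAllowed, windowMs) {
  return Math.ceil(windowMs / requestsAllowed);
}

// Calls `fn` for each item in order, waiting as needed between calls.
// `wait` and `now` are injectable so the pacing can be tested without real delays.
async function pacedMap(items, fn, { requestsAllowed, windowMs, wait = sleep, now = () => Date.now() }) {
  const interval = minIntervalMs(requestsAllowed, windowMs);
  const results = [];
  let nextAllowedAt = 0;
  for (const item of items) {
    const delay = nextAllowedAt - now();
    if (delay > 0) await wait(delay);
    nextAllowedAt = now() + interval; // earliest start time for the next request
    results.push(await fn(item));
  }
  return results;
}
```

For example, with a documented limit of 60 requests per minute, minIntervalMs(60, 60_000) is 1000, so pacedMap sends at most one request per second. Spacing requests evenly is a conservative choice; it gives up bursts the API might tolerate in exchange for never exceeding the limit.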

Strategies to Avoid HTTP 429 Errors

We have a full article dedicated to avoiding API rate limits. Here's a brief summary of strategies you can use to avoid the HTTP 429 Too Many Requests error.

- Identify the root cause: Monitor your external API requests (or use monitoring provided by the API itself) to understand why you are sending too many requests. If the rate limit is 1000 requests per minute, do you have 1 application/user sending 1001 requests, or 1001 users sending 1 request each?

- Optimize API Requests: If a few applications are sending many requests to an external API, determine whether they can send fewer. Instead of making multiple individual calls to fetch different pieces of data, consider consolidating them into a single API call. For example, if you need information about multiple users, use a batch endpoint (if the API offers one) to fetch data for all of them in one request.

- Cache your Responses: Avoid calling the API again while the data you already have is still fresh. Many APIs respond with caching headers (such as Cache-Control) that indicate how long the data stays fresh. For client-side code, use a data-fetching library like TanStack Query, which handles caching for you.

- Upgrade your API Plan: When all else fails, it might be time to throw in the towel and pull out your wallet. Many APIs offer higher rate limits on more expensive plans. If you are legitimately running up against an API's rate limits, chances are you will need to upgrade soon anyway as your volume approaches your plan's quota.
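The caching strategy can be sketched in a few lines. This is a minimal illustration, assuming a JSON GET endpoint and honoring only the max-age directive of the Cache-Control header; parseMaxAge and createCachedFetcher are hypothetical names, and a real caching layer handles many more cases (no-store, revalidation, request deduplication).

```javascript
// Minimal sketch of a response cache that honors Cache-Control: max-age.
// Assumptions: GET requests returning JSON; directives such as no-store,
// no-cache, and private are NOT interpreted here.

// Extract max-age (in seconds) from a Cache-Control header, or 0 if absent.
function parseMaxAge(cacheControl) {
  if (!cacheControl) return 0;
  const match = /(?:^|,)\s*max-age\s*=\s*(\d+)/i.exec(cacheControl);
  return match ? parseInt(match[1], 10) : 0;
}

// Returns a function that fetches JSON, reusing cached data while it is fresh.
// `fetchImpl` defaults to the global fetch (Node.js 18+); `now` is injectable for tests.
function createCachedFetcher(fetchImpl = fetch, now = () => Date.now()) {
  const cache = new Map(); // url -> { expiresAt, data }

  return async function getJson(url) {
    const entry = cache.get(url);
    if (entry && entry.expiresAt > now()) {
      return entry.data; // still fresh: no request is sent to the API
    }

    const response = await fetchImpl(url);
    if (!response.ok) {
      throw new Error(`HTTP error! status: ${response.status}`);
    }
    const data = await response.json();

    // Only cache when the API says how long the data stays fresh.
    const maxAge = parseMaxAge(response.headers.get("cache-control"));
    if (maxAge > 0) {
      cache.set(url, { expiresAt: now() + maxAge * 1000, data });
    }
    return data;
  };
}
```

For example, after const getJson = createCachedFetcher(), calling getJson(url) twice within the max-age window sends only one request to the API.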

The strategies above should work whether the excess requests come from a single user or application or from many. If you are still encountering HTTP 429 errors, read on to learn how to handle them.

Handling HTTP 429 Too Many Requests Errors

Let's say you tried your best to minimize the number of requests you make and you are still hitting the rate limit. There are strategies you can implement both client-side and server-side to handle this.

Naive Approach: Client-side Retries with Exponential Backoff

One common approach is to retry the request from the client with exponential backoff: wait a short interval before the first retry, then multiply the wait time (typically doubling it) with each subsequent attempt.

Pros

- Simple to implement

Cons

- Doesn't handle multiple users/apps simultaneously making calls

- There is wasted time between when the API can start accepting requests again and when you actually fire your backed-off request
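A minimal sketch of client-side exponential backoff, assuming doRequest is any async function that returns a fetch-style response; retryWithBackoff, backoffDelay, and their options are hypothetical names. The wait doubles after each 429 (500 ms, 1 s, 2 s, ...) up to maxMs, and the last response is returned once the retries are used up.

```javascript
// Minimal sketch of client-side retries with exponential backoff.
// Assumption: `doRequest` is an async function returning an object with `status`,
// e.g. () => fetch(url). Only 429 responses are retried.

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Delay before retry number `attempt` (0-based): baseMs, 2*baseMs, 4*baseMs, ... capped at maxMs.
function backoffDelay(attempt, baseMs = 500, maxMs = 30_000) {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

async function retryWithBackoff(doRequest, { retries = 5, baseMs = 500, maxMs = 30_000, wait = sleep } = {}) {
  for (let attempt = 0; ; attempt++) {
    const response = await doRequest();
    // Return successes, non-429 errors, and the final 429 once retries run out.
    if (response.status !== 429 || attempt >= retries) return response;
    await wait(backoffDelay(attempt, baseMs, maxMs));
  }
}
```

For example, retryWithBackoff(() => fetch(url)) retries a rate-limited call up to five times. A common refinement is to add random jitter to each delay so that many clients throttled at the same moment don't all retry in lockstep.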

Slightly Smarter: Respect Retry-After Header

Some servers include a Retry-After header when responding with an HTTP 429 error. This header specifies how long the client should wait before making another request, either as a number of seconds or as an HTTP date. By honoring this value, you not only reduce the risk of hitting the rate limit again but also retry as soon as the server allows, which provides a faster user experience than backing off blindly.

Pros

- Also simple to implement

Cons

- Doesn't handle multiple users/apps simultaneously making calls

- Relies on every external API you call including the header

Here's a brief Node.js sample that uses the Retry-After header to recover from HTTP 429 errors:

// Uses the global fetch API available in Node.js 18+.
async function makeRequestWithRetry(url, options = {}, retries = 3) {
  const response = await fetch(url, options);

  if (response.status === 429 && retries > 0) {
    const retryAfter = response.headers.get("retry-after");
    let delay = 1000; // Default delay if Retry-After is missing or unparseable

    if (retryAfter) {
      // Retry-After is either a number of seconds or an HTTP date
      const seconds = Number(retryAfter);
      if (Number.isFinite(seconds)) {
        delay = Math.max(0, seconds * 1000);
      } else {
        const retryAt = Date.parse(retryAfter);
        if (!Number.isNaN(retryAt)) {
          // Never wait a negative amount of time if the date has already passed
          delay = Math.max(0, retryAt - Date.now());
        }
      }
    }

    console.log(`Rate limit hit. Retrying after ${delay / 1000} seconds...`);
    await new Promise((resolve) => setTimeout(resolve, delay));
    return makeRequestWithRetry(url, options, retries - 1);
  }

  if (!response.ok) {
    throw new Error(`HTTP error! status: ${response.status}`);
  }

  return response.json();
}

In this example, the function retries up to three times. When it receives a 429 response, it waits for the duration given in the Retry-After header (or a one-second default if the header is missing) before trying again. This keeps your app from sending a burst of requests in a short period and risking being blocked by the server.

Smarter Solution: Gateway-level Rate Limiting

Rather than performing rate limiting client-side, one can proxy all external API calls through an API gateway like Zuplo. The gateway can keep track of the number of requests coming through and can throttle the calls it makes to the external API based on the documented rate limits.

Pros

- Handles distributed systems/apps making calls to the same external API

- Additional benefits around authentication and security for your API (for example, your external API key stays hidden behind the gateway)

Cons

- Adding an API gateway can introduce complexity and cost to your project

One thing to consider is whether you need a response from the external API at all. If you don't, you can use a task queue (for example, Celery), which lets you control how fast tasks (i.e., external API calls) execute and apply backoff strategies with little extra code. This lets you eventually make all the calls you want, as long as the exact timing isn't important. If you do need the response, you can switch to a WebSocket approach, where the gateway streams responses back to the client once the calls are made.
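The outbound throttling a gateway or rate-limited task queue performs can be illustrated with a token bucket. This is a minimal sketch, not Zuplo's or Celery's implementation: it runs in a single Node.js process, TokenBucket and throttled are hypothetical names, and the capacity and refill rate are assumptions you would derive from the external API's documented limits. Centralizing exactly this logic in one shared place is what lets a gateway coordinate many apps.

```javascript
// Minimal token-bucket sketch of outbound throttling.
// Assumptions: single process; capacity/refill values come from the external
// API's documented limits. `now` and `wait` are injectable for testing.

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

class TokenBucket {
  constructor(capacity, refillPerSecond, now = () => Date.now()) {
    this.capacity = capacity; // maximum burst size
    this.refillPerSecond = refillPerSecond; // sustained request rate
    this.tokens = capacity;
    this.now = now;
    this.lastRefill = now();
  }

  // Add tokens for the time elapsed since the last refill, up to capacity.
  refill() {
    const t = this.now();
    const elapsedSeconds = (t - this.lastRefill) / 1000;
    this.tokens = Math.min(this.capacity, this.tokens + elapsedSeconds * this.refillPerSecond);
    this.lastRefill = t;
  }

  // Takes a token and returns 0, or returns the ms to wait until one is available.
  tryTake() {
    this.refill();
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return 0;
    }
    return Math.ceil(((1 - this.tokens) / this.refillPerSecond) * 1000);
  }
}

// Runs `call` once the bucket allows it, so outbound calls never exceed the rate.
async function throttled(bucket, call, wait = sleep) {
  for (;;) {
    const waitMs = bucket.tryTake();
    if (waitMs === 0) return call();
    await wait(waitMs);
  }
}
```

For an API documented at 10 requests per second, new TokenBucket(10, 10) allows a burst of 10 calls and then about one call every 100 ms; wrapping each outbound request as throttled(bucket, () => fetch(url)) enforces that rate for every caller sharing the bucket.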

Implementing HTTP 429 Errors and the Retry-After Header

As an API developer, returning HTTP 429 errors from your API is a good way to manage traffic and avoid overloading your system, even though they can be annoying for users to deal with. Here's how you can do it:

Steps to Implement HTTP 429 Errors and Retry-After

- Determine Limit and Duration: Decide how many requests your API can handle in a specific time period.

- Track Requests: Use server-side logic to count how many requests each user or IP address sends.

- Check the Requests: Before processing a request, check whether the user has hit the rate limit. You can do this using a library (see our Node.js example or Python example).

- Respond with 429 Status: If the limit is exceeded, respond with an HTTP 429 status code.

- Set Retry-After Header: Use the Retry-After header to tell the client when they can try again.

- Handle Valid Requests: If the request is within the limit, process it as usual.
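The steps above can be sketched with Node.js alone. This is a minimal, hypothetical example (checkRateLimit, handleRequest, LIMIT, and WINDOW_MS are illustrative names) that uses a fixed-window counter, one of several possible algorithms, keeps state in memory in a single process, and assumes a limit of 5 requests per client per 60 seconds. It returns an abbreviated Problem Details body.

```javascript
// Minimal sketch of a fixed-window rate limiter that answers with 429 + Retry-After.
// Assumptions: one Node.js process, in-memory state, and an illustrative limit of
// 5 requests per client per 60 seconds. A production setup would share state
// across instances and evict old entries.

const LIMIT = 5;
const WINDOW_MS = 60_000;
const windows = new Map(); // clientKey -> { count, windowStart }

// Counts the request and reports whether it is allowed in the current window.
function checkRateLimit(clientKey, now = Date.now()) {
  let w = windows.get(clientKey);
  if (!w || now - w.windowStart >= WINDOW_MS) {
    w = { count: 0, windowStart: now }; // start a fresh window
    windows.set(clientKey, w);
  }
  if (w.count < LIMIT) {
    w.count += 1;
    return { allowed: true, retryAfterSeconds: 0 };
  }
  // Seconds until the current window ends, rounded up so clients never retry early.
  const retryAfterSeconds = Math.ceil((w.windowStart + WINDOW_MS - now) / 1000);
  return { allowed: false, retryAfterSeconds };
}

// Request handler compatible with http.createServer from node:http.
function handleRequest(req, res) {
  // Track by IP here; key by API key or user ID when requests are authenticated.
  const clientKey = req.socket.remoteAddress;
  const { allowed, retryAfterSeconds } = checkRateLimit(clientKey);

  if (!allowed) {
    res.writeHead(429, {
      "Content-Type": "application/problem+json",
      "Retry-After": String(retryAfterSeconds),
    });
    res.end(JSON.stringify({
      type: "https://httpproblems.com/http-status/429",
      title: "Too Many Requests",
      detail: "You made too many requests to the endpoint, try again later",
    }));
    return;
  }

  res.writeHead(200, { "Content-Type": "application/json" });
  res.end(JSON.stringify({ message: "ok" }));
}
```

To try it, pass the handler to Node's built-in server, for example require("node:http").createServer(handleRequest).listen(3000); the sixth request from the same IP address within a minute receives a 429 with a Retry-After header. Because the counters live in one process and are never pruned, this sketch does not cover the distributed case.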

What Does an HTTP 429 Response Look Like?

We recommend responding to your users with an API response in the Problem Details format (RFC 7807, since superseded by RFC 9457):

HTTP/1.1 429 Too Many Requests
Content-Type: application/problem+json
Content-Language: en
Retry-After: 5

{
  "type": "https://httpproblems.com/http-status/429",
  "title": "Too Many Requests",
  "detail": "You made too many requests to the endpoint, try again later",
  "instance": "/account/12345/msgs/abc",
  "trace": {
    "requestId": "4d54e4ee-c003-4d75-aba9-e09a6d707b08"
  }
}

Rate Limiting + Sending HTTP 429 Responses with Zuplo

At Zuplo, Rate Limiting is one of our most popular policies. Zuplo offers a programmable approach to rate limiting that allows you to vary how rate limiting is applied for each customer or request. Implementing truly distributed, high-performance Rate Limiting is difficult; our promise is that using Zuplo is cheaper and faster than doing this yourself. Here's precisely how to do it:

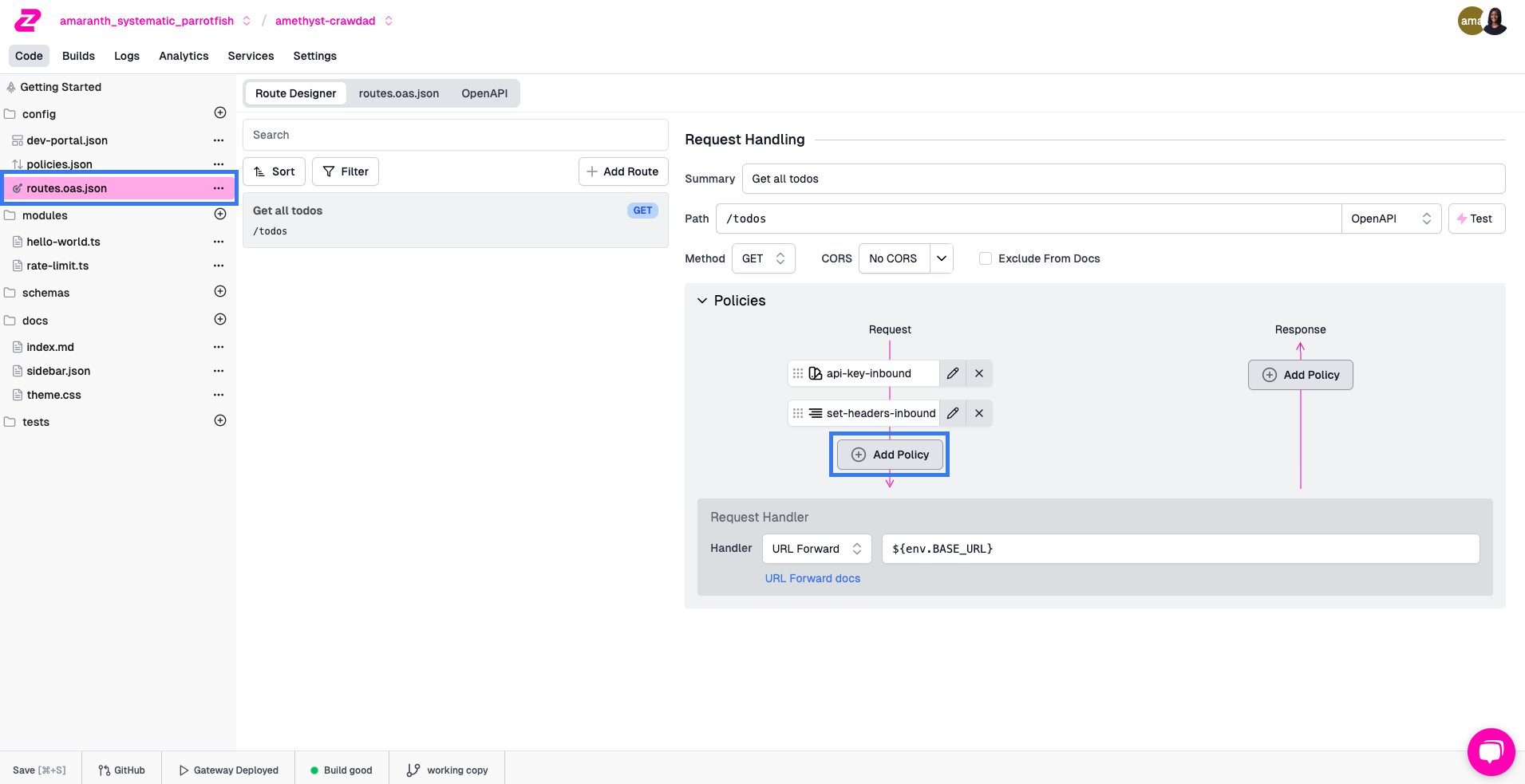

Add a rate-limiting policy

Navigate to your route in the Route Designer and click Add Policy on the request pipeline.

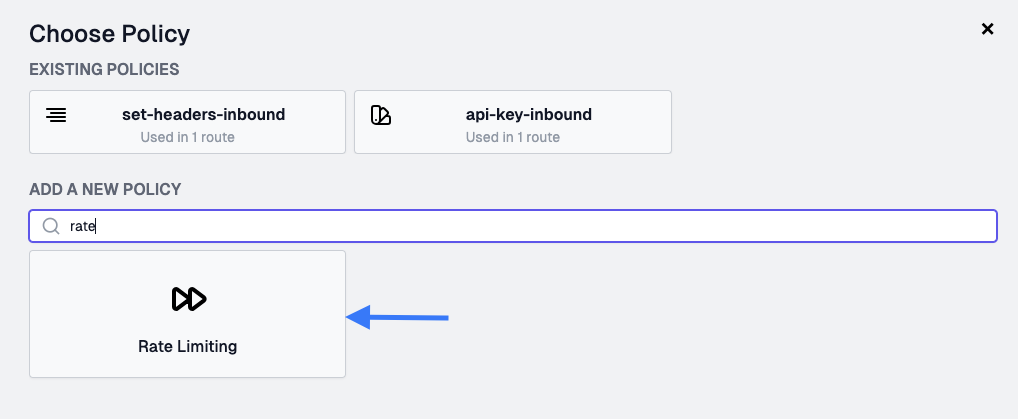

In the Choose Policy modal, search for the Rate Limiting policy.

If you're using API Key Authentication, you can set the policy's rateLimitBy option to "user" and allow 1 request per minute:

{
  "export": "RateLimitInboundPolicy",
  "module": "$import(@zuplo/runtime)",
  "options": {
    "rateLimitBy": "user",
    "requestsAllowed": 1,
    "timeWindowMinutes": 1
  }
}

Now each consumer gets a separate rate-limiting bucket. Any user who exceeds the limit will receive a 429 Too Many Requests response with a Retry-After header. Learn more about Rate Limiting with Zuplo.

Considerations for Implementing HTTP 429 and Retry-After

- Prevents Overload: Protects your API from being overwhelmed by too many requests.

- Communicates Clearly: Tells clients explicitly when they need to slow down.

- Avoids Blockages: Encourages clients to wait rather than hammering your API, reducing the risk that they get banned.

- Preserves Experience: Stops a few users from hogging resources, so everyone gets a smooth experience.

- A Vector for Attack?: Some argue that documenting your rate limits and returning a Retry-After header makes it easier for malicious actors to write bots that abuse your API while staying just under the limit.

Rate limits with HTTP 429 and Retry-After help balance your API’s load, making sure it stays reliable and fair for everyone.